I’m tired of stupid people treating me like I’m an idiot

Why are the biggest backers of generative AI so incredibly stupid? And why do they think we're as dense as they are?

So much of my anger towards generative AI centers upon the inherent indignity of the technology, and the undisguised contempt that its most virulent supporters have towards human beings.

By now, you’ve used generative AI — either out of curiosity, or because a tech company forced it upon you, as Google did with its insipid AI overviews. Since you’re reading this newsletter, I wager you believe as I do: that generative AI is inherently shit and it can’t do what its proponents claim.

I didn’t have to convince you of this. This realization is one that you reached without any outside assistance. You asked ChatGPT a question, and it hallucinated something that you immediately knew to be false. You scrolled through TikTok and saw some ghastly AI-generated video that, although superficially convincing, still had the tell-tale signs of something computer-generated. Perhaps it was the slightly robotic affect of the people in the video, or the unusual way the characters delivered their lines, or the fact that they walked through each other like they were playing the original Doom with the no-clip cheat on.

You’ve seen this — all of this — and you know it’s shit.

You would have to be an absolute fucking cretin to think that this (an AI that tells you to eat rocks and glue) is somehow the future of technology. Not just that, but that it’s going to single-handedly drive you into the throes of deprivation by taking away your job, and the jobs of everyone you know.

Seriously, who would be stupid enough, at this point, to think that there’s a future in generative AI, and it’s a future as grand as that promised by Chaucerian hucksters like Sam Altman, and Dario Amodei, and Satya Nadella?

Follow-up question: Have you been on LinkedIn lately?

It’s (Not) The End of the World As We Know It

On July 22, Microsoft Research published a paper called “Working with AI: Measuring the Occupational Implications of Generative AI.” It ended up driving a lot of clicks around the web, in part because it listed the occupations purportedly most likely to be affected by AI, and those most resilient to automation.

A lot of people (people who, thanks to the algorithm, showed up in my LinkedIn feed) posted it, and seemed genuinely terrified. Those were mostly people who worked in the industries deemed most vulnerable. There were also a bunch of ghoulish tech dipshits talking about the inevitability of this future, why it’s pointless to resist, and why we should all be excited about handing the reins of human creativity and labor to a bunch of machines owned by Silicon Valley billionaires.

Not me.

I didn’t panic. I didn’t gloat. I laughed.

It was, unintentionally, the funniest thing I’ve ever read in my entire life, and it raises a simple question: did the authors of this paper speak to anyone in the fields they listed as most likely to be doomed?

Top of the list was “Interpreters and Translators.” Admittedly, Google Translate is pretty good, especially compared to when I (unsuccessfully) used it to cheat on my French homework in high school. The thing is, Google Translate has been really good for a while now, and translators are still a thing, in part because translation and interpretation aren’t just about creating grammatically correct sentences that match the wording of the original text. There’s context and inferred meaning, two things AI is hilariously bad at understanding. There’s also creativity!

Sidenote: Coincidentally, someone who works as a translator made a very good point in a comment to an earlier article, which I’ll share below.

“Every translator who, increasingly, for years now, has been told "Oh, but MT is making your job easier" as an excuse for paying us less, with the result that shoddy translations are being presented to the end client by translation agencies who just don't give a fuck about quality and who claim that having "a human in the loop" is the same as actually translating the underlying meaning of the text.

It's not. It's not good enough. It's not even remotely close. It's biased in so many ways, and it's built on the stolen intellectual property of millions of authors, artists and musicians and on ghost work by people all over the world.

It impoverishes our societies, reduces the best of human culture to pallid, third-rate copies and facilitates the creation of the vilest, most toxic content the worst of us can conjure up.

And the environmental costs, in a time when we're all already seeing the undeniable impact of human-made climate change, are simply intolerable.”

I used to live in France (and the French-speaking part of Switzerland), I can actually speak the language, and occasionally I’ll look up French translations to see how certain quirky bits of writing made the jump: bits where there’s no obvious or graceful literal translation.

The Harry Potter series is a good example. In French, Hogwarts is Poudlard, which translates into “bacon lice.” Why did they go with that, instead of a literal translation of Hogwarts, which would be “Verruesporc?” No idea, but I’d assume it has something to do with the fact that Poudlard sounds a lot better than Verruesporc.

Someone had to actually think about how to translate that one idea. They had to exercise creativity, which is something that an AI is inherently incapable of doing. And I haven’t even mentioned the other facets of being an interpreter.

The guy who sits between President Trump and whatever foreign dignitary he’s meeting, and is forced to translate the awkward bits, like when Trump forgets said dignitary’s name and instead comes up with “Mr Japan” or something. That guy is someone who has been vetted, both in terms of his skills, as well as by the security services.

Do you really think that, given the highly-sensitive nature of international diplomacy, the US government — or any sane government, for that matter — would be willing to hand over his role to an AI model? Behave.

The second item was even funnier. Historians.

HISTORIANS. Are you fucking kidding me? An AI that hallucinates more than one-third of the time when answering pub-quiz level questions is somehow going to interpret and explain history?

And that’s without asking other questions, like: “How is that AI historian going to access and understand written archives that haven’t been digitized, aren’t in its training data, and can’t be grabbed through RAG?” Or, “How will it interview primary sources?”

Or even: “How will it interpret the vast amounts of data that a historian accesses as part of their job, determine the strength and accuracy of each account and the biases and perspectives underpinning it, and then accurately turn all of that into something that explains a period of history that is either under-reported or hasn’t been explored in any real depth?”

Historians, I add, were ranked higher than writers and sales representatives, the latter of which faced high levels of automation even before generative AI stumbled into the public’s consciousness.

It gets funnier as you move along, with Microsoft listing “News Analysts, Reporters, Journalists” as one category of job at high risk of AI-enabled extinction.

Ah yes, the technology that underpins Google’s spectacularly inaccurate AI Overviews — which The Atlantic called a libel machine for its propensity to hurl untrue allegations of serious (and sometimes criminal) wrongdoing against innocent people — is somehow going to report the news.

The same technology that Apple used to summarize notifications, only for it to be pulled after making things up (like claiming that Luigi Mangione had killed himself), is the next Woodward and Bernstein, is it? Fuck off.

Admittedly, on a factual basis, it’s hard to imagine AI being worse than the Daily Express or Newsmax. But these institutions are, mercifully, outliers (out-liars?), with the rest of the media ecosystem largely trying — though often falling short — to report the news accurately, though perhaps not always fairly.

Sidenote: Like I said in a previous newsletter, everyone has their biases, myself included. The whole idea of a truly impartial media is an illusion that only children believe in, and if you’re one such person, I can only ask why you’re reading this article instead of watching Paw Patrol.

The problem with AI isn’t that it won’t report the news accurately, but rather that it can’t because it doesn’t actually understand anything — not even the words that it regurgitates after burning the equivalent of a small Pacific island’s annual energy consumption on a single prompt.

And that’s without having to ask basic questions like: “How will an AI be able to interview a source, especially in-person at an event?” Or, “Will said AI be able to push back when an interviewee is obfuscating or lying, or being evasive?”

(Admittedly, some reporters don’t do that — Hi Casey! Hi Kevin! — but that doesn’t mean it’s something we should settle for).

The list goes on, and on, and on, and the laughs only grow louder. Mathematicians? You mean the same AI that can’t count the number of times a certain letter appears in a word? Economics teachers? Models?

So we’re supposed to fall in love with GPT-5o’s long-legged training data, are we? Does Microsoft think that we’re all about to develop an irrepressible fetish for people with six fingers, or fingers that grow out of their fingers?

Are these people insane, or do they just think we’re stupid?

A Rare Bit Of Hope

I’ll freely admit that this newsletter isn’t always the cheeriest read. When I used Malcolm Middleton as the soundtrack for one post, you knew that things were bleak, and what you would read would send you reaching straight for the Zoloft.

So, let’s change that. Here is what I believe.

AI is not going to take your job.

The people who claim that AI will take your job are either profoundly stupid, or profoundly dishonest, and either way, we shouldn’t listen to them.

How do I know that AI isn’t going to take your job? Firstly, because it hasn’t already.

We’re three years into this shit. OpenAI is now, allegedly, a $300 billion company — although, I imagine that if it had to publish an S-1, that valuation would be slashed to something far, far more reasonable in a matter of days.

Sidenote: Think I’m joking? Just look up what happened to WeWork when it tried to go public.

In the first half of this year, the big hyperscalers (Microsoft, Amazon, Google, and Meta) have spent more than $200bn on data centers and the associated hardware, in part to service demand from generative AI companies. The real figure (when you add in smaller companies like CoreWeave and Crusoe, and latecomers like Oracle) is almost certainly much, much higher.

Let’s assume this capex continues at the same pace for the rest of the year. For the sake of simplicity, that puts genAI-related capex at roughly $400bn this year ($200bn in the first half, doubled). That’s only slightly more than the entire economic output of Romania, a country of 19 million people that’s also an EU and NATO member.

That’s a lot of money. Surely we’d be seeing something by now, right? And I’m not counting layoffs that would have happened anyway but for which AI provided a perfect excuse, or layoffs that were quickly followed by massive offshore hiring.

Where are the AI-related dole queues? Why haven’t I seen anyone on the street holding a sign that says: “Hungry and homeless — Sam Altman took my job?” Why do I still see jobs for writers and editors on LinkedIn? Why is this apocalypse not all that apocalyptic?

Could it be that generative AI is not really that good at… anything?

When I worked for (an unnamed publicly traded tech company), I was told to use a tool called Jasper.ai to produce bland promotional emails for webinars and stuff. It was the kind of brain-dead work that I had no desire to do (and, indeed, hadn’t done until the content teams were restructured), and the tool was the biggest load of crap I’ve ever encountered. It was so bad that, despite being instructed to use it to save time, I just went rogue and wrote everything myself.

AI can’t even do generic marketing slop well.

Another question: if AI models are going to replace humans, why are they still basically the same (at least in terms of performance) as the GPT-3.5-based ChatGPT we encountered in late 2022? This is subjective, but every time I use ChatGPT or Claude to see what the latest version can do, I inevitably walk away feeling confident in the eventual triumph of humanity over the machines.

This is crap. Everyone can see it. Everyone knows what AI-generated content looks like from the outset, and more to the point, everyone hates it. Why are we doing this? Why have we allowed ourselves to believe in the inevitable AI dispossession of people?

Why is it that every attempt to replace a human with an AI model is either a hilarious failure, or basically fraud?

Like Builder.ai’s AI coder that was, in fact, a bunch of human engineers working out of India.

Like Amazon’s AI-powered supermarkets that were, in fact, run by a bunch of people in India.

Like Devin, the AI coder that was, in fact, not very good and marketed in a questionably ethical way (by lying — I’m saying that Cognition Labs lied).

Like when Klarna replaced its customer service workers with AI, only to hire them back after realizing that LLMs kinda suck.

Why is it that software engineers have started to sour on generative AI tools?

To be clear, I’m not saying things aren’t bad out there. They are. Hiring is stagnant, and layoffs are up. But if you think that AI is the reason why things are bad, then you’ve been the victim of a massive, massive con, perpetrated by the most stupid and venal people ever to grace the face of this planet.

What’s more likely: An AI model that can’t count letters and still makes shit up is taking your job, or that AI’s being used as an excuse to shroud behaviors that most people with a moral core find objectionable, like offshoring and slashing jobs — forcing those remaining to do the work of several of their former colleagues — for the sake of shareholder value?

If you’re working in media, you know things are bad. You also know that things have always been bad, especially since the 2000s. What’s more likely: that publications are replacing staff with AI, or that the fundamentals of the media business are only getting tougher, especially as traffic from search and social media dries up?

Google’s AI Overviews (which are dogshit, by the way) are absolutely savaging traffic to sites, according to a study from the Pew Research Center. In fairness, Google denies this, and I’m choosing not to believe it because I wasn’t dropped on my head as an infant.

There’s no salvation in social media, either. Facebook has been dead for a while, and Twitter is useless unless you actually pay for Twitter Blue — though I have no idea how useful it would be to pay for a checkmark, and I have no desire to find out. Tech companies still consume nearly three-quarters of all digital ad revenue, with publishers left to fight over the scraps.

Events? They’re recovering after Covid, but it’s still a long process. Those ancillary businesses that newspapers used to have — like classifieds and dating profiles — are gone, have been for decades, and they’re never coming back. And I haven’t even mentioned the private equity-ification of media in the US, where companies buy titles, slash headcount to a skeleton level, and keep the desiccated remains on life support for as long as they deem fit.

This is what’s taking media jobs. Not AI. The grim reality of an industry that hasn’t quite managed to adapt to the digital world, and that’s routinely fucked with by trillion-dollar tech companies for their own benefit.

Yes, Business Insider is now using AI, cutting more than 20 percent of its workforce in the process. G/O Media continues to flirt with AI to write articles. I guarantee you that both of these experiments will end in failure and a feast — a royal banquet — of humble pie being consumed. Well, perhaps not in the case of G/O Media, but only because it’s shutting down and leaving the media space.

I know some incredible journalists. People who you probably know, and others who aren’t the kind of high-profile reporters at the New York Times with tens of thousands of followers on Twitter and BlueSky.

I’ll name some of them. Gareth Corfield. Dan Swinhoe. Abi Whitstance. Adam Smith. Richard Speed. The list goes on and on. The idea that an AI model — one that doesn’t understand the literal meaning of words, and just regurgitates what it’s seen before, or makes predictions based on language it’s seen previously — could replace them is, quite frankly, one of the most obscene insults you could throw at a person.

Coding? Possibly, if you don’t care about the output, and if you don’t care about learning anything, or having stuff that you understand (and, thus, can maintain). In reality, these AI assistants aren’t replacing anyone — because they can’t — but are instead doing menial grunt work, like writing boilerplate code, or tests, or comments.

Agents? You mean the same agents that are built on the same hallucination-prone foundations, that invent entire baseball teams in the middle of the Gulf of America, and that arguably cost as much as a human being (and possibly more), while working more slowly and failing at multi-step tasks with any reliability?

Genuinely, having read all this, and having taken the time to read other voices (like Gary Marcus and Ed Zitron), if you still think that AI could replace you, then please see a therapist.

Liars, Idiots, and Idiot Liars

I use the phrase “AI bubble” because it’s something that people can relate to, and can actually understand. It would, however, be more accurate to describe it as “the AI con.” Every bit of hype that you’ve read, and all the profligate spending we’ve seen, is because a bunch of liars lied to other liars, who may or may not be a bit simple, and those liars hope we’re as dumb as them.

In the next section of this newsletter, I’m going to permanently damage my employment prospects in the technology industry.

Jensen Huang is the leather jacket-loving CEO of Nvidia, and probably the only person to have actually made serious money from the generative AI boom, in part because he’s selling the GPUs that these models need, and those GPUs are very, very expensive indeed. As a result, he’s directly incentivized to gin up fear and hype about generative AI — because the more people that believe generative AI is the future, the more that he’ll make.

As I wrote in my last post, Huang went on the All-In podcast — hosted by some of the worst people in the world — and said that people’s success in a post-AI world will be determined by how much they use AI (no doubt, running on hardware that his company designed and sold).

"We also know that… although everybody's job will be different as a result of AI, some jobs will be obsolete, but many jobs will be created. The one thing that we know for certain is that, if you're not using AI, you're going to lose your job to somebody who uses AI. That, I think, we know for certain. There's not a software programmer in the future who's gonna be able to hold their own typing by themselves,” he said, as quoted by PC Gamer.

Separately, Thomas Dohmke, the CEO of Github, the Microsoft-owned source code management platform, recently tweeted that: “Either you embrace AI, or get out of this career.”

Dohmke — who, from what I can tell, hasn’t coded as a day-job for well over a decade — also added that “AI is on track to write 90% of code within the next 2–5 years.”

You may wonder what the evidence for this is, and I’ll tell you: a field study, performed by GitHub, of 22 developers. That’s an embarrassingly small sample, and one whose methodology I’d question, had GitHub even bothered to publish it.

Sorry, no. We’re not going to let this slide. 22 developers? Are you kidding me?

We’re supposed to “embrace AI, or get out of [software development]” on the basis of the opinions of 22 fucking people? People who, I add, likely already had a favorable disposition towards generative AI, given that a.) they didn’t see it diminishing their role as developers, b.) half of them said AI would write 90% of code within two years, and c.) the other half said that would happen within five years.

Dohmke, as CEO of GitHub, presumably owns a fair bit of stock in Microsoft (its parent company), and thus stands to personally benefit from people believing AI is going to replace software engineers (at least with respect to coding, which accounts for only a fraction of the work a software engineer actually does).

And yet, if you peel back the layers just a little bit, you can see that it’s all nonsense that doesn’t hold up to even the slightest bit of scrutiny.

I don’t know whether Dohmke and Huang are stupid. I actually think Jensen is pretty smart, insofar as he’s managed to position Nvidia at the trough of every single hype bubble over the past decade or so, growing fat off crypto, and now AI. But I don’t believe that they actually believe the stuff they’re saying.

I don’t believe that, under any other circumstances, Dohmke would make sweeping statements about an industry based on the opinions of 22 (presumably selectively-chosen) people. I believe he is doing so right now because he benefits from it, and because he wants to convey an image of AI’s inevitability.

Dario Amodei, meanwhile, genuinely causes me inner turmoil as I’m forced to wrestle with the question of whether he’s an idiot, a liar, or a lying idiot. Earlier this year, he told Axios that AI would eliminate half of all entry-level roles in the coming years. Here’s a quote from the interview:

When talking about a possible scenario, based on his projections of AI development, he said: “Cancer is cured, the economy grows at 10% a year, the budget is balanced — and 20% of people don't have jobs."

Amodei is doing what any hypeman does here: generating hype, whether it’s based on fact or fiction. I don’t know whether he actually believes this, or whether he’s just saying whatever insane shit pops into his head whenever a dictaphone passes his way.

Whatever. Who I do believe are stupid are the journalists who unquestioningly reported this without even cushioning it with the caveat that it was a.) based on absolutely nothing whatsoever, and b.) said by someone who stands to benefit from people believing that lie.

Someone who I genuinely believe is stupid is Masayoshi Son, founder of Softbank, who is ploughing insane amounts of money (or, at least, pretending to) into OpenAI, right at the apex of its most ludicrous valuations. Here are some hilarious quotes that I grabbed from a Light Reading article while researching this piece.

“The era when humans program is nearing its end within [the Softbank Group]. Our aim is to have AI agents completely take over coding and programming."

And:

“There are no questions [AI] can't comprehend. We're almost at a stage where there are hardly any limitations.”

And my personal favorite:

“[AI hallucinations are] a temporary and minor issue."

I could regurgitate the usual stuff — that hallucinations are a feature of current LLM technology, and that said LLMs don’t understand anything, but rather make guesses about the right text to use at the right moment — but you’ve already heard all of that. Instead, allow me to be brief.

Hey, Masayoshi Son. You lost $16bn on WeWork — a company founded by someone who looks like every slightly-too-old Australian backpacker in every hostel I’ve ever been to. You don’t get to talk. Ever.

I’m going to talk about Satya Nadella now, partly because he said some dumb shit, but also because I want to ask a really important question: Am I the only one who thinks he looks like an Ozempic version of Gregg Wallace?

Picking out the dumbest thing Nadella has ever said about generative AI is sort of like trying to find the most irritating screaming baby on a plane. That said, there are some strong candidates.

“I don’t need to see more evidence ... to know that this is working and is going to make a real difference,” said Nadella in 2024, according to CNBC, when talking about AI boosting productivity.

That’s fortunate, because a survey of 2,500 professionals published that very same year showed that the vast majority — 77% — say that the AI tools their bosses insist upon them using have “actually decreased their productivity and added to their workload.”

There’s also this classic line from an interview with Wired’s Steven Levy: “sometimes hallucination [are] ‘creativity’”

Again, I’m not going to repeat how LLMs work, even though it would directly address why the “creativity” argument is so spectacularly stupid, but I would like to ask that a lawyer please use this line when they next get caught submitting an AI-generated filing. And please let me know how you get on.

Nadella is an idiot, and while he’s not as bad as Steve Ballmer was, I think that’s only true if we add the word “yet.” There’ll come a point when all this stuff comes crashing down, and Nadella will have to answer difficult questions about why Microsoft spent hundreds of billions on data centers pursuing something that, in reality, was never going to be as big as its proponents claimed.

Masayoshi Son is also an idiot. Jensen Huang and Thomas Dohmke are, in my estimation, shameless liars. Amodei, I reckon, is a bit of both.

The Insults At The Heart of the AI Con

It’s so insulting. First, the idea that an LLM — something that literally just guesses about what characters to write in a piece of text, or a block of code — could replace a human being with creativity and soul and knowledge is profoundly offensive.

It’s also, as we’ve witnessed all too often, completely untrue. I’m not just talking about the cases where a company used AI, only for it to blow up in their faces. Even the little things (the AI-generated text or imagery that you just know is AI-generated, so you click away, or think worse of the creator or the company that used it) demonstrate how this technology cannot replace human labor.

But what makes it worse is that this lie — this insult — is being said by people who do not understand the job they seek to replace, or the people they plan on making destitute.

When do you think was the last time Satya Nadella wrote a line of code? Mark Zuckerberg stopped contributing code to Facebook in 2006. Sundar Pichai is a former McKinsey consultant — a company best known for turbo-charging the opioid crisis, and the 2008 financial crisis.

Do you think any of the people who wrote that Microsoft Research paper were historians? Or translators? Or reporters?

Of course not.

That, by itself, is another massive, smash-mouth insult. “I don’t understand your job, but I’ve decided that this machine that constantly makes things up is perfectly capable of replacing you.”

These people are not bright, or decent, or moral. They’re not good people. If I sincerely believed that the technology I was building had the potential to bring about Great Depression levels of unemployment (which, to go back to Amodei’s quote from earlier, is what a 20-percent joblessness rate would look like) I’d have serious pause. I’d think: “Is this a good thing?”

I’d also ask: “Societally, are we ready for these levels of joblessness?” And — given the fact that welfare programs have been slashed to the bone in the UK and the US, and are only getting more threadbare — I’d conclude: “probably not.”

Seriously, when Masayoshi Son says that the era of the human programmer is over, why are people actually listening to him? Especially after WeWork.

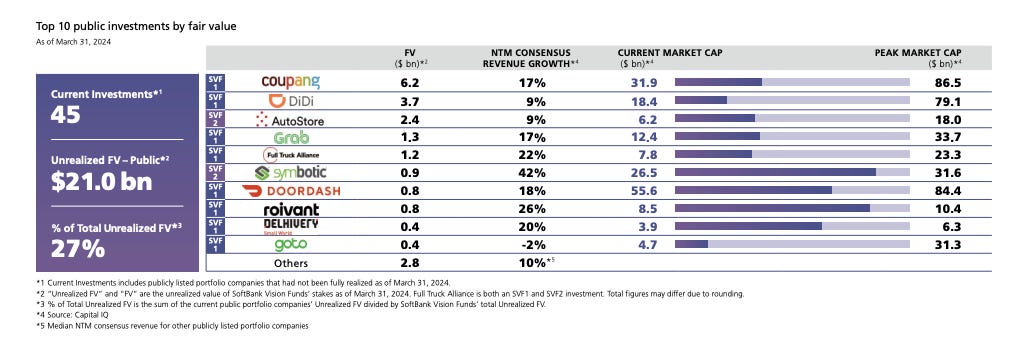

Have you actually looked at the stuff that’s in the Softbank Vision Fund? As of the 2024 annual report, every single one of the ten largest publicly traded companies in the Vision Fund was trading below its peak market cap. Six of those companies were trading at half their peak value.

Masayoshi’s career has been one Sideshow Bob rake-smash after another, and I genuinely don’t understand why that isn’t mentioned in any of the articles about his gloomy predictions for the labor force. That strikes me as important context that the reader should know.

None of these people are geniuses — though they’ve certainly managed to craft the image of being incredibly smart, and that’s why people actually believe them when they say stupid things, or things that are, with the most minimal of scrutiny, obviously false.

These people believe they’re smarter than us, but they aren’t. They’re deeply, deeply mediocre human beings whose sole accomplishments boil down to “being in the right place at the right time.”

And we don’t have to listen to them. To let them scare us is a choice, and it’s one I refuse to make.

And you shouldn’t either.

Footnote

I’ve got a few stories in the pipeline, but the big one — and the one I’ve been wrestling with for the past few weeks — is about what it means for the Internet to die. I wanted to publish it this week, but given the stuff with the Online Safety Act, there are a few bits I want to add/expand upon.

It was my birthday on Monday. If you want to soften the blow of the fact that this is my last year of being closer to my twenties than my forties, feel free to buy a paid sub. You won’t get anything, other than my undying affection. At least, for now.

Quick newsletter recommendation: My old The Next Web colleague Callum Booth writes fun, sardonic shit about tech on The Rectangle and it’s well worth a read.

Feel free to email me at me@matthewhughes.co.uk, or to follow me on Bluesky.
