Do we have a moral obligation to give Sam Altman a burning wedgie?

It's complicated!

Note from Matt: Happy Wednesday. This isn’t a proper newsletter — I’ve got a sufficiently morose one in the pipeline — but rather me talking about a piece I contributed to a philosophy journal about AGI, and a podcast I appeared on that just went live.

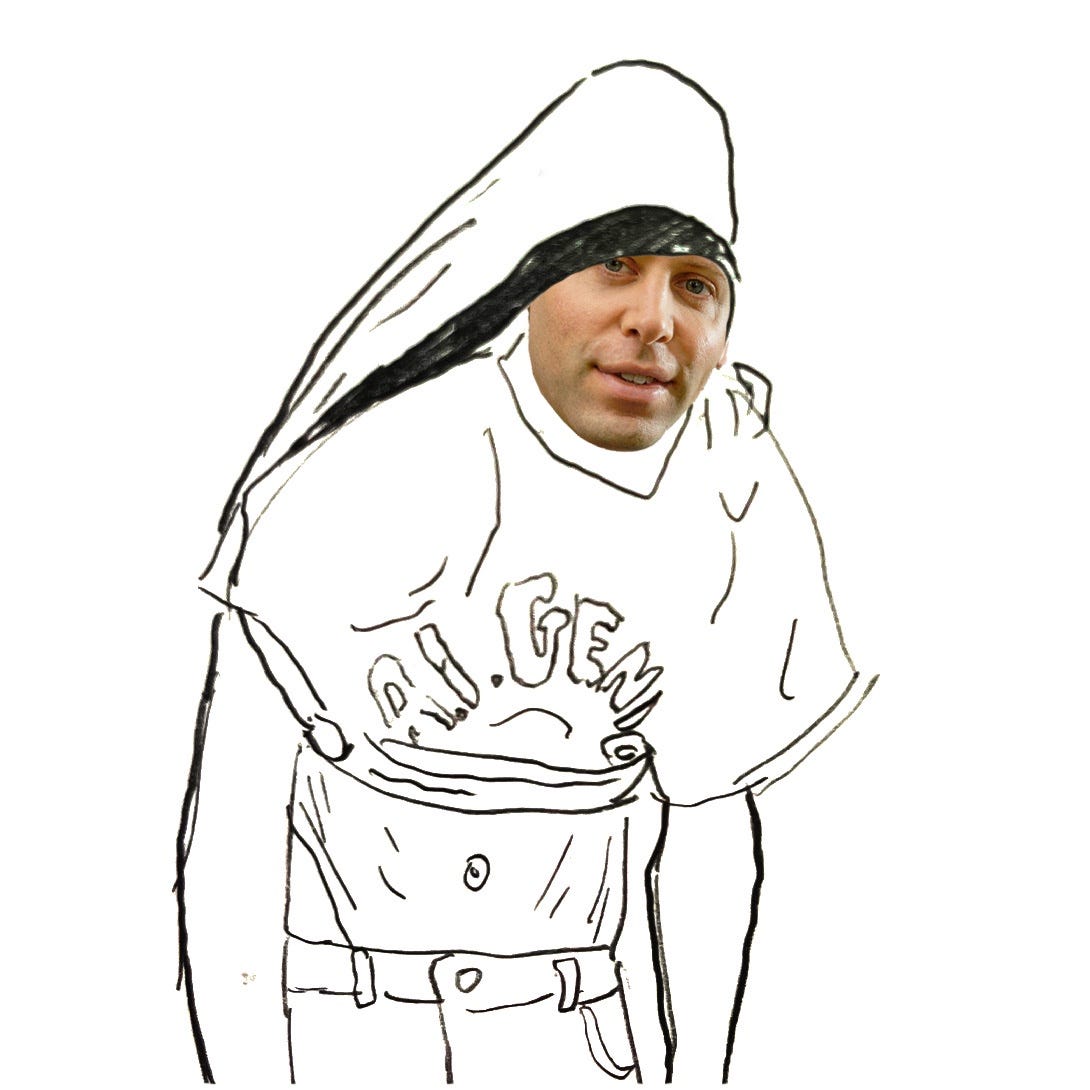

The featured image was created, at very short notice, by the incredibly talented Sarah Dyer on Bluesky, who responded to a post I wrote that basically said, “hey, I’m bad at art, can someone photoshop me a pic of Sam Altman getting wedgied?” She has my eternal gratitude.

Anyway, on with the show.

A few weeks ago, I received a LinkedIn message from a former colleague at The Next Web, Tristan Greene. His employer was launching a new publication that examined AGI (artificial general intelligence) through the lens of moral philosophy, and would I like to contribute a piece to the first edition?

Naturally, I said yes.

Anyway, that new publication — AGI Ethics News — launched earlier this week, featuring 1,200 words penned by myself (incidentally, possibly the shortest thing I’ve written this year).

You might remember, but in the first post of this newsletter, I talked about my educational and professional background, and the various factors that drove me to start writing What We Lost. It was a long and winding piece, which one random dude described as a “slog” — which, to be honest, is fair — that went back to my childhood.

Without rereading the entire thing, I think I mentioned in that piece how the sixth form college I attended withdrew the CompSci course I wanted to study right before I started it. It also refused to allow me to study any of the sciences — a prerequisite for medicine, which I was also interested in — forcing me to take English Language, French, and Theology and Philosophy.

It was the latter that I actually performed best in (which is hilarious for two reasons, as during those two years, I went from being a devout Catholic to a convinced atheist. Also, I would later move to France and spend the majority of my adult career working as a writer. Life can be strange!).

It turns out, examining the fuzzy, often contradictory world of human morality is really interesting! The whole idea of “right” and “wrong” is subjective and conditional — and any absolutist approach naturally crumbles when presented with edge cases and contrived hypotheticals designed to test the limits of any unflinching stance.

Would you kick a kitten?

No! Never! That would be terrible! And, besides, someone might capture it on their CCTV cameras and turn me into a national pariah, just like Cat Bin Lady was in 2010 (arguably one of the weirdest moments in British history, and one I’m stunned that nobody on YouTube has created a 50-minute retrospective documentary examining. This clip from Charlie Brooker’s 2010 Wipe will have to suffice).

But would you kick a kitten if it was sitting on the cure to cancer, and said kitten refused to move out of the way, and the only way to get the cure to cancer — and thus, save the lives of millions of people — is to Diego Maradona the little furry shit into the stratosphere?

It’s a different question, isn’t it? One with very different implications, and a much harder ethical calculus to parse. Kicking the kitten — that beautiful, helpless kitten — would be wrong in either scenario, but would depriving humanity of the cure to cancer be even more immoral?

Let’s examine it from another perspective. Given that kicking the kitten — thus unearthing the cure to cancer — would result in a greater good for humanity, are you therefore morally obligated to kick the kitten? Would not kicking the kitten be an immoral act — one that’s more wrong than kicking it in the first place?

You (Immanuel) Kant Wedgie A Tech CEO

You’re probably wondering what all of this has to do with AGI — a term whose definition nobody really agrees on, and which describes something that remains purely hypothetical and may never be fully realized?

Well, the thing is, AGI could — if we believe the proclamations of people like Sam Altman (depending on the year) or Geoffrey Hinton — result in humanity’s bright candle being snuffed out. Homo sapiens would join dodos and dinosaurs on the list titled “things that used to exist, but no longer do.”

I’ll be honest — I think AGI has about as much chance of wiping out humanity as ChatGPT has of wiping out software developers, which is to say, none. But if we assume that Altman isn’t just a floppy-haired fabulist that idiot New York Times columnists take seriously, we’re left with a really interesting question.

Do we have a moral obligation to take action to stop the rise of AGI — or, for those with the requisite technical skills, to intervene and ensure that AGI doesn’t become the apocalypse-causing nightmare that compute-hungry carnival barkers like Altman insist it will be?

That, in essence, is what I explore in my piece for AGI Ethics News, titled: “Do we have a moral obligation to stop dangerous AGI?”

Let’s assume that the chance of AGI wiping out humanity is 10% — with the remaining 90% being the fully-automated luxury dreamworld that the techno-optimists believe it will be. Do we have the same moral obligation to act as we would if the chance was 100%?

10% is a big number — for context, the New York Times gave Donald Trump a 25% chance of winning the 2016 US Presidential Election — so let’s make it smaller.

Suppose there’s a 1% chance that AGI leads to the destruction of humanity. Do we still have the same responsibility to do something? What if the odds shrink further to just 0.01%? Hell, let’s add some more zeroes and make it 0.000001%?

Where does the obligation start, and at what point can we breathe a sigh of relief and go back to our doomscrolling?

And if we have a moral obligation to act, what does that actually look like in practical terms?

My piece references a Forbes article that describes a bunch of MIT and Stanford students who dropped out because they believed in the imminence of AGI, and its existential risk, and wanted to do something.

Some got jobs at OpenAI (motivated, I’m sure, by a desire to reform the institution from the inside, and not, say, the fact that OpenAI pays ludicrously well, even when you don’t factor in its kind-of-but-not-really equity sharing program), and one guy started work at a think tank that focuses on AI safety.

Whether these actions are effective is a different question. The point is, they acted because they felt an impetus to do so — whether that impetus was altruistic (a desire to protect their fellow human) or self-serving (a desire not to end up on the pointy end of a T-1000’s sharpened liquid metal finger).

My piece spends a lot of time referencing Peter Singer, in part because he’s the philosopher most readily identifiable with discussions of individual moral responsibility in the face of big, intractable, systemic problems — whether that be animal rights, or the scourge of global child poverty.

I also ask — though, for obvious reasons, I’m very careful with my words — what actions are justified, should we assume that AGI is the existential threat that people believe it is.

Singer’s early writing on animal rights — most notably his 1975 book, Animal Liberation — talked about an individual’s obligation to reduce the suffering of sentient animals by, for example, abstaining from meat and animal products.

Shortly after, we saw the emergence of an animal rights movement defined by its willingness to engage in direct action attacks against fur farms and science laboratories, performing acts that broke the law and sent many of its members to jail.

Although Singer stopped short of condoning any law-breaking in Animal Liberation, he would later address the direct action wing of the animal rights movement in his book Practical Ethics, where he acknowledged that in certain circumstances — particularly those where you avoid causing any physical harm to a human being — it can be moral to break the law in furtherance of reducing or ending the suffering of an animal.

And so, if AGI will result in a Terminator-style hellscape, what acts are off the table? Does the threat of AGI justify acts that, in normal circumstances, we’d consider to be immoral?

Would you give Sam Altman a wedgie — a really nasty, arse-stinging wedgie — if it made him question his work on AI, resulting in him deciding to retire peacefully to his apocalypse-proof underground bunker in New Zealand or whatever?

Assuming the existential threat of AGI, do you have a moral obligation to give Sam Altman that wedgie, turning his Hanes undercrackers into a SnapBack? Would not giving Sam Altman a wedgie be, itself, an evil act?

And how big a threat must AGI become before that moral obligation to grip the elastic on Altman’s briefs and raise it up into the sky, like you’re vibing away at a non-denominational megachurch, finally emerges?

Morality is complicated!

For legal reasons, I should say that I do not condone giving anyone a wedgie. And I say that not simply because I have some nasty PTSD from my high school days.

Anyway, you should check out AGI Ethics News, and absolutely read the thing I wrote for them.

One last thing: My mate Tristan — God love him — was very firm that AGI Ethics News had a hard 1,200 word limit that, under no circumstances, could I cross. If you’ve read my stuff over the past few months, you’re undoubtedly (and painfully) aware that it has a tendency to go on, and on, and on.

Tristan, having worked with me, and having edited some of my stuff at TNW, knows what I’m like. Which, I imagine, partially explains his firmness.

Hitting that limit, therefore, required me to resist the very same temptations I indulge with every new article I publish.

Side note: Tristan is American. When I worked with him, I regularly said “You’re Tristan my melons, man” — a reference that he, as an American, absolutely did not get, but one that my British readers undoubtedly will.

This newsletter — which I literally wrote to tell you, the reader, to check out this new publication and the thing I wrote for it — is already nearly 1,500 words long, or 300 words longer than the very thing I’m trying to promote.

I can’t help myself.

As an aside, if anyone’s reading this and wants to pay me to write words for them — I’m a freelancer, after all, and one that’s utterly fucking shameless — feel free to drop me an email (me@matthewhughes.co.uk) or get in touch on Bluesky.

I have another thing to plug.

Remember how I said, a few newsletters back, that I was interviewed for a new podcast by a guy called Myles McDonnough?

Well, that podcast is now live. I get animated and I say “fuck” a lot, because when I’m angry and passionate, my language devolves to somewhere between “Jerry Springer guest” and, to quote Malcolm Tucker, “a hairy-arsed docker after twelve pints.”

I talk about why generative AI could never be beautiful, and why even the most flawed human efforts will always surpass, on an aesthetic level, those generated by a machine.

I like Myles. He’s a good guy and you should subscribe to his show.

Also, yes, my accent is absolutely fucked. I’m aware. It’s what happens when you take a Scouser, move him to the North East, then send him to Europe, and then give him an American wife.

Oh, one last thing.

Another plug, though one that isn’t something I’ve written or said (or, if you’re being uncharitable about my accent, grunted).

My taste in music could be described as “tattooed depressed dads with a sertraline prescription.” William Fitzsimmons is, and always will be, my favourite singer-songwriter, and I’ve seen him live at least four times, including twice in London, a city that, as a professional Northerner, I do my utmost to avoid.

And so, it was almost fate when I stumbled upon Jeff Janis — a genuinely beautiful singer-songwriter who, yes, has an impressive beard (though, in the absence of his medical records, I can’t confirm the sertraline prescription) — on Bluesky the other day.

His stuff is, in a word, gorgeous. It’s one part City and Colour, one part Bon Iver, and one part Zach Bryan. You can listen to his latest album for free here.

And I strongly encourage you to do so. It’s great stuff.

In Singerian fashion, I'm already donating 50% of my salary to the GoFundMe "Infinite Wedgies for Altman", which'll make this happen. Without such support, we can't rely upon a horrified superintelligence arising, which could simulate and devastatingly shear its creator's perineum for all eternity.

I'm willing to experiment with whatever until we figure out what makes AI cultists Not Like That.

Thank you for the link to Jeff Janis. As a fellow Sad Dad Music Enjoyer (https://bsky.app/profile/atherton.bsky.social/post/3kwujyvsnnz22), this is great!